Threat Analysis: Deconstructing and Countering Weaponized Synthetic Media

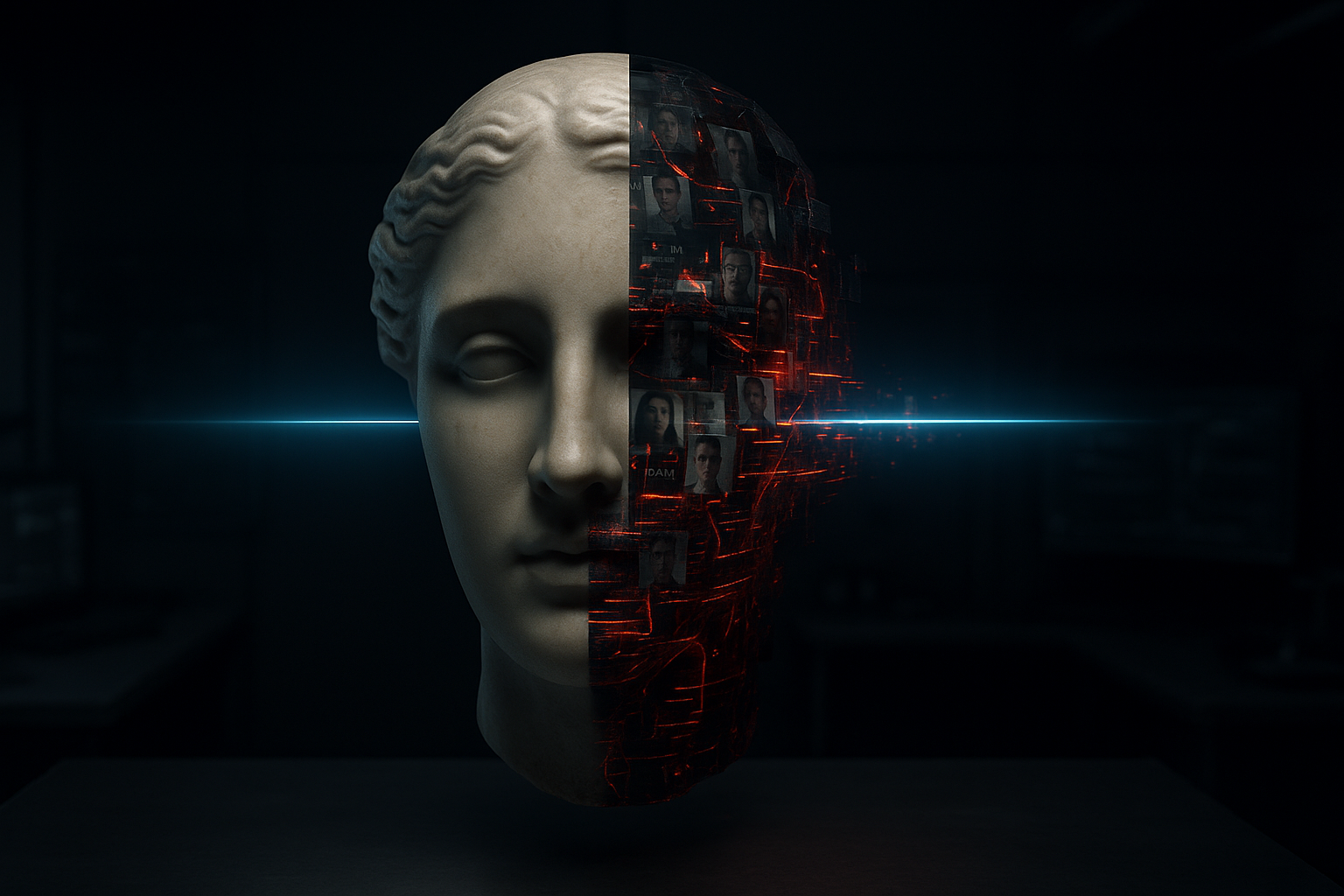

The weaponization of synthetic media, colloquially known as "deepfakes," represents a sophisticated and rapidly evolving threat vector in the modern information landscape. These are not merely manipulated videos; they are AI-generated, hyper-realistic fabrications engineered to execute campaigns of disinformation, reputational sabotage, and high-stakes fraud.

The technology, built on deep generative models such as generative adversarial networks (GANs), has become increasingly accessible, moving beyond state-level actors to individuals with malicious intent. The primary targets are often public figures, but any individual with a digital footprint is a potential target. This intelligence briefing will deconstruct the threat and outline the definitive protocol for its mitigation.

The Architecture of Deception: Forensic Markers of Synthetic Media

While synthetic media is designed to be seamless, the AI generation process often leaves behind subtle, machine-level artifacts. For the trained human eye, or the sophisticated analytical engine, these markers are the signals that expose the fabrication. Analysis should focus on detecting inconsistencies in the following domains:

- Facial Topology and Expression: Look for unnatural symmetries, anomalies in blinking patterns (or a lack thereof), and micro-expressions that are incongruent with the spoken audio.

- Environmental Inconsistencies: Scrutinize the interplay of light and shadow on the subject. A synthetic face superimposed onto a real video will often exhibit subtle but detectable mismatches in lighting direction, color temperature, and shadow casting.

- Audio-Visual Synchronization: Analyze the audio track for unnatural pauses, metallic reverberations, or a slight desynchronization with the subject's lip movements.

- Provenance Verification: The most definitive countermeasure is to verify the source. A high-impact video will almost certainly have a verifiable origin. The absence of a credible source is, in itself, a primary indicator of a fabrication.
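
Two of the markers above, blinking patterns and audio-visual synchronization, lend themselves to simple numeric checks. The sketch below is illustrative only. It assumes that some face-landmark detector (not specified here) already supplies six (x, y) points per eye and a per-frame mouth-opening measure, and that a per-frame audio energy value is available. The function names, the 0.2 threshold, and the two-frame minimum are assumptions chosen for the example, not settings from any particular product.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio for six landmarks p1..p6, where p1 and p4 are the eye corners.

    The ratio falls toward zero as the eyelid closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = 2.0 * math.dist(p1, p4)
    return vertical / horizontal


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least min_frames consecutive frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def estimate_av_lag(mouth_opening: Sequence[float], audio_energy: Sequence[float],
                    max_lag: int) -> int:
    """Return the frame lag that best aligns audio energy with mouth opening.

    A positive result means the audio trails the lip movement by that many frames.
    """
    n = min(len(mouth_opening), len(audio_energy))
    m_mean = sum(mouth_opening[:n]) / n
    a_mean = sum(audio_energy[:n]) / n
    m = [v - m_mean for v in mouth_opening[:n]]
    a = [v - a_mean for v in audio_energy[:n]]

    def score(lag: int) -> float:
        # Mean product of the mean-centered signals over the overlapping frames.
        pairs = [(m[i], a[i + lag]) for i in range(n) if 0 <= i + lag < n]
        if not pairs:
            return float("-inf")
        return sum(x * y for x, y in pairs) / len(pairs)

    return max(range(-max_lag, max_lag + 1), key=score)
```

A clip with few or no blinks over a long stretch, or a consistent nonzero lag between lips and audio, is a reason for closer inspection rather than proof of fabrication. Both signals can also arise from compression, editing, or ordinary capture conditions.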

The MambaPanel Protocol: A Framework for Proactive Defense

A reactive posture is insufficient. The most effective defense against the weaponization of your likeness is a proactive, multi-phased protocol designed to control the raw material of these fabrications: your own images.

Phase 1: Proactive Asset Monitoring

A deepfake cannot be created without source material. The first phase of the protocol is to establish and maintain a comprehensive intelligence picture of your own visual assets across the open web. The MambaPanel platform is engineered for this exact purpose. By conducting regular, comprehensive scans, you can identify and neutralize unauthorized or compromised images before they can be weaponized against you.

Phase 2: Counter-Proliferation

Should a synthetic media asset be deployed, the secondary objective is to track its proliferation. Our visual intelligence engine can be used to find instances where the deepfake is being re-uploaded and disseminated across the public web. This provides a clear map of the attack's propagation, which is critical for the final phase.
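
Tracking re-uploads generally relies on some form of near-duplicate matching, because copies are often resized, recompressed, or slightly recolored. The sketch below uses a simple average hash as a stand-in for that idea. It is not a description of how the MambaPanel engine works; the function names, the grayscale-grid input, and the distance cutoff of 10 bits are all assumptions made for the example.

```python
from typing import Dict, List, Sequence


def average_hash(gray: Sequence[Sequence[float]], size: int = 8) -> int:
    """Average hash of a grayscale image given as rows of pixel intensities.

    The image is reduced to size x size cells by block averaging, and each
    cell contributes one bit: 1 if it is brighter than the mean cell, else 0.
    """
    height, width = len(gray), len(gray[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for r in range(size):
        r0, r1 = r * height // size, (r + 1) * height // size
        for c in range(size):
            c0, c1 = c * width // size, (c + 1) * width // size
            block = [gray[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def find_reuploads(reference: int, candidates: Dict[str, int],
                   max_distance: int = 10) -> List[str]:
    """Return candidate keys whose hash is within max_distance bits, closest first."""
    hits = [(hamming(reference, h), key) for key, h in candidates.items()]
    return [key for dist, key in sorted(hits) if dist <= max_distance]
```

For video, one plausible approach is to hash sampled frames and compare them the same way. An average hash tolerates mild brightness and scaling changes but not crops or heavy edits, so a production system would likely need a more robust fingerprint.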

Phase 3: Threat Neutralization

With a clear map of the proliferation, you can initiate a systematic campaign of neutralization. Our platform provides the necessary intelligence and tools to prepare formal DMCA takedown notices and GDPR erasure requests, which call on publishers and hosting providers to remove the malicious content.
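
One way to make the campaign systematic is to group the discovered URLs by host, so that each publisher receives a single notice covering every infringing page. The sketch below shows that grouping step only. The function name and the input list are hypothetical, and the example says nothing about what a valid notice must contain.

```python
from collections import defaultdict
from typing import Dict, Iterable, List
from urllib.parse import urlsplit


def group_by_host(urls: Iterable[str]) -> Dict[str, List[str]]:
    """Group URLs by hostname, with the hosts carrying the most copies first.

    Entries without a parseable hostname are skipped, and duplicates are removed.
    """
    grouped = defaultdict(set)
    for url in urls:
        host = urlsplit(url).hostname  # already lowercased by urlsplit
        if host:
            grouped[host].add(url)
    ordered = sorted(grouped.items(), key=lambda item: (-len(item[1]), item[0]))
    return {host: sorted(found) for host, found in ordered}
```

The resulting mapping gives one entry per host, each with the list of pages to cite in that host's notice.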

Conclusion: The Imperative of a Proactive Defense

The threat of weaponized synthetic media is real, but it is not insurmountable. It is a technological threat that demands a superior technological response. By moving from a reactive to a proactive security posture—controlling your source material, identifying threats as they emerge, and systematically neutralizing their spread—you can effectively defend against this new class of digital attack.

MambaPanel provides the foundational intelligence layer for this defense. Your identity is your own. We provide the protocol to keep it that way.