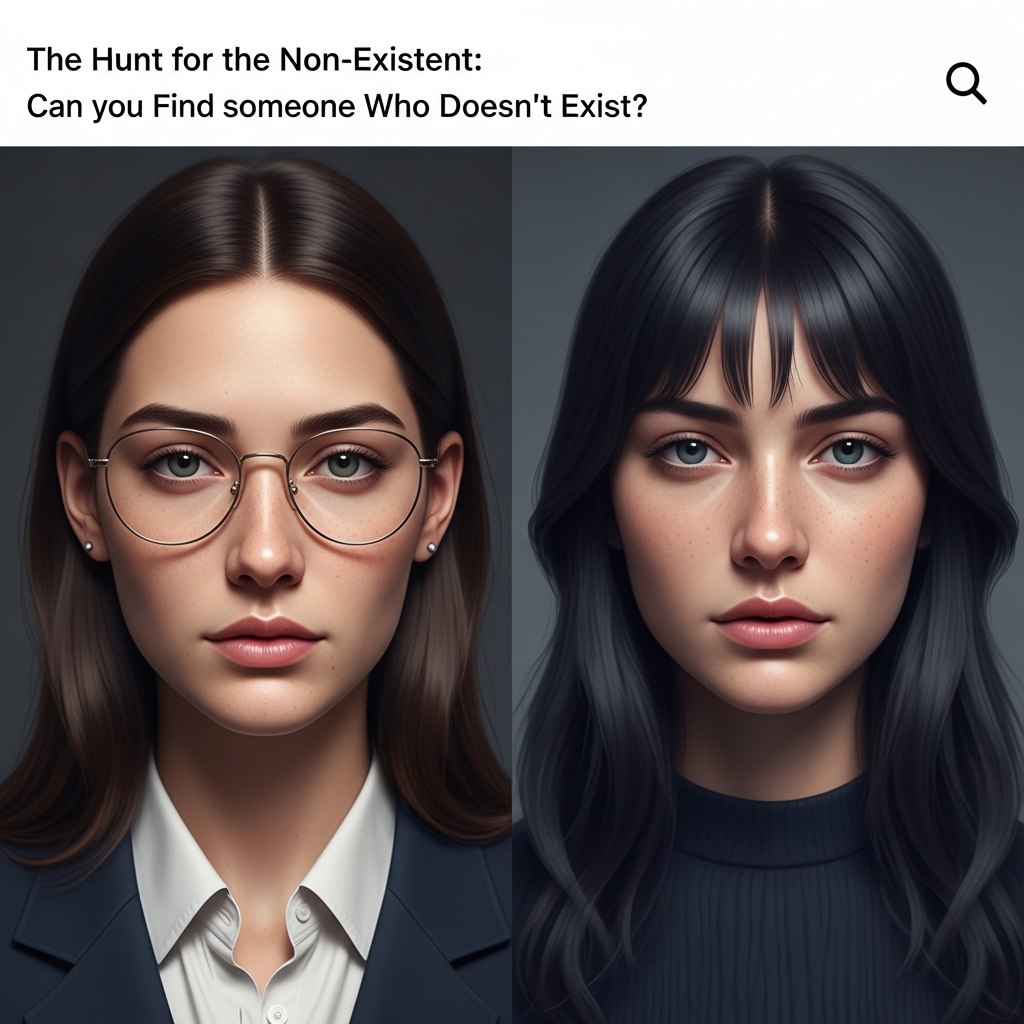

What Happens When You Run a Face Search on an AI-Generated Person?

The internet is awash in faces. Profile pictures, social media posts, news articles, advertising campaigns – faces are everywhere. But what happens when the face you're looking at isn't real? What happens when you run a face search on an AI-generated person?

The rise of sophisticated AI models has made it incredibly easy to create photorealistic faces of people who don't exist. Sites like ThisPersonDoesNotExist.com demonstrate this power, generating endless streams of unique, convincing portraits with each refresh. These AI-generated faces are becoming increasingly difficult to distinguish from real people, raising serious questions about identity, trust, and the potential for misuse.

So, can a face search engine like MambaPanel find a match for an AI-generated face? Usually not, but the answer depends on whether and how the image has already been used online.

The Challenge: No Real-World Presence

The fundamental challenge is that AI-generated faces don't have a corresponding real-world identity. A freshly generated face hasn't been uploaded to social media, featured in news articles, or captured by public cameras. It exists solely as a digital creation. A typical face search, which relies on indexing and matching against a vast database of real-world images, is therefore unlikely to find a direct match.

Think of it this way: MambaPanel's face search technology works by identifying unique facial features and patterns within an image and comparing them against our database of over 7 billion faces. If an AI-generated face has never been posted online, there's simply nothing in that database for the algorithm to match against.
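
To make the idea concrete, here is a minimal sketch of feature-based matching. It assumes each face has already been reduced to a numeric feature vector (an "embedding") and that similarity is measured with cosine similarity, which is a common approach but not necessarily how MambaPanel works internally. The three-number vectors, the labels, and the 0.8 threshold are made up for illustration; real systems use much longer vectors produced by a trained model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(query, index, threshold=0.8):
    """Return (label, score) pairs scoring at or above the threshold, best first."""
    scored = [(label, cosine_similarity(query, emb)) for label, emb in index.items()]
    hits = [(label, score) for label, score in scored if score >= threshold]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)

# Hypothetical index: label -> toy feature vector.
index = {
    "news_photo_17": [0.9, 0.1, 0.3],
    "profile_204": [0.2, 0.8, 0.5],
}

# A face that resembles an indexed photo, and a never-posted synthetic face.
known_query = [0.88, 0.12, 0.31]
synthetic_query = [-0.5, 0.4, -0.7]

known_hits = search(known_query, index)
synthetic_hits = search(synthetic_query, index)
```

In this toy setup the first query clears the threshold only for `news_photo_17`, while the synthetic query returns an empty list because nothing in the index is close to it.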

When a Match *Might* Occur

While a direct match is unlikely, there are scenarios where a face search *could* yield results, even for an AI-generated image:

- Reused AI Faces: If the same AI-generated face is used across multiple platforms or websites (perhaps by different individuals creating fake profiles), a face search might identify these instances. This is because, even though the person doesn't exist, the *image* exists in multiple places. MambaPanel's ability to identify identical faces across different sources could flag these instances as potentially suspicious.

- AI-Generated Faces Based on Real People: Face-generation models are trained on datasets of real faces. While the output is a new, unique face, it might bear a resemblance to individuals within the training data. In rare cases, this resemblance could be strong enough to trigger a weak match to a real person in the database. Such a hit is most likely a false positive and requires careful human review.

- Modified or Manipulated Real Faces: If a real person's face is heavily modified using AI techniques (e.g., age progression, gender swap), a face search might still identify the original individual, albeit with a lower confidence score. This is because the underlying facial structure remains, even if the appearance is altered.

- Deepfake Detection Features: Some face search tools, including future iterations of MambaPanel, may incorporate features specifically designed to detect deepfakes. This is not face matching: these features analyze subtle inconsistencies and artifacts in the image that are characteristic of AI-generated content. They could flag the image as potentially synthetic, even if a direct face match isn't found.
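
The scenarios above can be sketched as a small routine that sorts search hits into confidence bands and checks whether near-identical images appear under different names. Everything here is an assumption for illustration: the `Hit` record, the two thresholds, and the example sources are hypothetical and do not describe MambaPanel's actual output format or scoring.

```python
from dataclasses import dataclass

@dataclass
class Hit:
    source: str        # where the matching image was found
    claimed_name: str  # name attached to the image at that source
    score: float       # similarity score in [0, 1], higher is closer

# Hypothetical cut-offs; a real tool would tune these on labeled data.
NEAR_IDENTICAL = 0.95
WEAK_MATCH = 0.60

def band(score):
    """Map a similarity score to a coarse confidence band."""
    if score >= NEAR_IDENTICAL:
        return "near-identical"
    if score >= WEAK_MATCH:
        return "weak"
    return "none"

def summarize(hits):
    """Flag image reuse across identities and weak hits that need a human."""
    strong = [h for h in hits if band(h.score) == "near-identical"]
    weak = [h for h in hits if band(h.score) == "weak"]
    names = {h.claimed_name.strip().lower() for h in strong}
    return {
        "reused_under_different_names": len(names) > 1,
        "needs_human_review": bool(weak),
        "strong_sources": sorted(h.source for h in strong),
    }

hits = [
    Hit("site-a.example/profile/1", "Anna K.", 0.99),
    Hit("site-b.example/u/77", "Maria L.", 0.97),
    Hit("news.example/story", "J. Doe", 0.70),
]
summary = summarize(hits)
```

Here the two near-identical hits carry different names, which is the reused-face pattern, and the 0.70 hit lands in the weak band, where it should be treated as a possible false positive until a person has reviewed it.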

Practical Examples and Use Cases

Let's consider some real-world scenarios:

- Fake Social Media Profiles: Someone creates a fake social media profile using an AI-generated face. A concerned individual uses MambaPanel to perform a face search on the profile picture. While a direct match to a real person is unlikely, if the same AI-generated face is being used on other fake profiles, the search might uncover these related instances, raising a red flag.

- Online Romance Scams: If a dating-site profile uses an AI-generated picture and the same image turns up in multiple locations under different identities, the profile can be flagged as potentially fraudulent.

- News Verification: A news outlet receives a photo from an anonymous source. Before publishing, they use MambaPanel to perform a face search to verify the person's identity and credibility. If the face is AI-generated, the search would likely return no matches. An empty result does not prove the face is synthetic, since a real person may simply have little online presence, but it should prompt further investigation.
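
One way to read these scenarios is as a simple triage rule that maps a search outcome to a next step. The sketch below is only an illustration of that reasoning: the `Action` names and the `triage` function are hypothetical, and the input is assumed to be the list of names attached to each matching image the search found.

```python
from enum import Enum

class Action(Enum):
    INVESTIGATE_SOURCE = "no matches: verify the source by other means"
    FLAG_POSSIBLE_FRAUD = "same face under several identities: treat as suspicious"
    CORROBORATE_IDENTITY = "one consistent identity: corroborate before relying on it"

def triage(claimed_names):
    """Pick a next step from the names attached to the matching images."""
    # Normalize case and whitespace so trivial variants count as one identity.
    distinct = {name.strip().lower() for name in claimed_names}
    if not distinct:
        return Action.INVESTIGATE_SOURCE
    if len(distinct) > 1:
        return Action.FLAG_POSSIBLE_FRAUD
    return Action.CORROBORATE_IDENTITY

no_match = triage([])
reused_face = triage(["Anna K.", "Maria L."])
single_identity = triage(["Anna K.", "anna k. "])
```

None of the three outcomes is a verdict on its own. Each one only suggests where a human reviewer should look next.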

The Future of Face Search and AI-Generated Faces

The arms race between AI face generation and face recognition technology is ongoing. As AI models become more sophisticated, it will become increasingly difficult to distinguish between real and synthetic faces. This necessitates the development of more advanced deepfake detection tools and techniques.

MambaPanel is committed to staying at the forefront of this technology. We are continuously refining our algorithms and exploring new methods for identifying and flagging AI-generated content. This includes incorporating deepfake detection capabilities and enhancing our ability to identify reused AI faces.

The Human Element Remains Crucial

It's important to remember that face search technology is just one tool in the fight against misinformation and fraud. Human judgment and critical thinking remain essential. A face search result, whether positive or negative, should always be interpreted in context and combined with other forms of verification.

Conclusion: Finding Reality in a World of AI

While a face search might not always find a "person" behind an AI-generated face, it can still provide valuable insights. It can help identify reused images, flag potentially fraudulent profiles, and prompt further investigation. In a world where it's becoming increasingly difficult to tell what's real and what's not, tools like MambaPanel play a vital role in helping us navigate the digital landscape.

In a world of AI faces, use a face search to find the real ones. Try MambaPanel today!